A new study that analyzed other studies (a meta-analysis) found that PBDEs, a class of flame retardant chemicals commonly found in furniture and household products, affect children's intelligence, resulting in a loss of IQ points. Most of the studies measured exposure to flame retardants during pregnancy and then looked at the child's IQ later. They found that the child's IQ dropped by 3.70 points for each ten-fold increase in PBDE levels (thus, the higher the PBDE levels, the greater the effect on the child's IQ). This is of concern because flame retardants are in so many products around us, both in and out of the home. The older flame retardants (PBDEs) were phased out by 2013, but it turns out that their newer replacements (such as TBB and TBPH, found in Firemaster 550) also get into people and also have negative health effects.

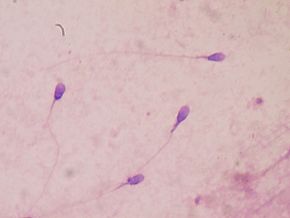

More and more research is finding health problems with flame retardants. Because they are "not chemically bound" to the products in which they are used, they escape over time and get into us through the skin (dermal), through inhalation (from dust), and through ingestion (from certain foods and from dust on our fingers). And because flame retardants are persistent, they bioaccumulate (they build up over time). They can be measured in our urine and blood. Evidence suggests that flame retardants may act as endocrine disruptors and thyroid disruptors, may be carcinogenic, may alter hormone levels and decrease semen quality in men, and may act as developmental neurotoxicants (when the developing fetus is exposed during pregnancy), so that children have lower IQs and more hyperactive behaviors.

Where are flame retardants found? All around us, and in us. They are so hard to avoid because they're in electronic goods, upholstered furniture, polyurethane foam, carpet pads, some textiles, the foam in baby items (car seats, bumpers, crib mattresses, strollers, nursing pillows, etc.), house dust, building insulation, and on and on. What to do? Wash hands before eating. Try to use a vacuum cleaner with a HEPA filter. Try to avoid products that say they contain "flame retardants". Only buy upholstered furniture with tags that say it is flame retardant free. The California Childcare Health Program has an information sheet on how to lower exposure to flame retardants. From Medical Xpress:

Flame retardant exposure found to lower IQ in children

A hazardous class of flame retardant chemicals commonly found in furniture and household products damages children's intelligence, resulting in loss of IQ points, according to a new study by UC San Francisco researchers. The study, published Aug. 3, 2017, in Environmental Health Perspectives, included the largest meta-analysis performed on flame retardants to date, and presented strong evidence of polybrominated diphenyl ethers' (PBDE) effect on children's intelligence. "Despite a series of bans and phase-outs, nearly everyone is still exposed to PBDE flame retardants, and children are at the most risk," said UCSF's Tracey Woodruff, professor in the Department of Obstetrics, Gynecology and Reproductive Sciences.....

The findings go beyond merely showing a strong correlation: using rigorous epidemiological criteria, the authors considered factors like strength and consistency of the evidence to establish that there was "sufficient evidence" supporting the link between PBDE exposure and intelligence outcomes. Furthermore, a recent report by the National Academies of Sciences endorsed the study and integrated evidence from animal studies to reach similar conclusions that PBDEs are a "presumed hazard" to intelligence in humans.

Researchers examined data from studies around the world, covering nearly 3,000 mother-child pairs. They discovered that every 10-fold increase in a mom's PBDE levels led to a drop of 3.7 IQ points in her child. "Many people are exposed to high levels of PBDEs, and the more PBDEs a pregnant woman is exposed to, the lower her child's IQ," said Woodruff. "And when the effects of PBDEs are combined with those of other toxic chemicals such as from building products or pesticides, the result is a serious chemical cocktail that our current environmental regulations simply don't account for." The researchers also found some evidence of a link between PBDE exposures and attention deficit hyperactivity disorder (ADHD), but concluded that more studies are necessary to better characterize the relationship.

PBDEs first came into widespread use after California passed fire safety standards for furniture and certain other products in 1975. Thanks to the size of the Californian market, flame retardants soon became a standard treatment for furniture sold across the country..... Mounting evidence of PBDEs' danger prompted reconsideration, and starting in 2003 California, other states, and international bodies approved bans or phase-outs for some of the most common PBDEs. PBDEs and similar flame retardants are especially concerning because they aren't chemically bonded to the foams they protect. Instead, they are merely mixed in, so they can easily leach out from the foam and into house dust, food, and eventually, our bodies. [Original study.]
